Jason Robert Carey Patterson's

Computing Background

Childhood

Like many of my generation, my first computer was a Commodore 64. My family were poor at the time, but my parents were forward-looking and somehow managed to scrimp and save to buy one when I was 12 years old. On it, I learned to play games really well, like a lot of boys that age do today, but I also had many ideas for better games, so I decided to learn to write my own. By reading books and trying things, I taught myself to program in BASIC, spending hours after school every day with the computer hooked up to our family TV screen.

First computer, a Commodore 64

I wrote a few simple games in BASIC (car racing, snow skiing), but I soon wanted to write better games, and BASIC just wasn't fast enough, so I taught myself to program in assembly language (machine code). I saved up to buy books (no World Wide Web back then!) and even hand-entered the code to a complete assembler, linker and sprite editor (with the help of my mother, who would read as I typed), since I couldn't afford expensive commercial programming tools (no open source back then, at least in the mainstream consumer market). As strange and tedious as it sounds (and was), learning machine code and assembly language in that way turned out to be a huge benefit in the long term – that was where I first became interested in the "nitty-gritty" hardware aspects of how computers really worked, especially their processors and graphics systems. I was an impressionable pre-teen, the perfect age for learning, and this was amazing stuff... "unreal", to use the word from those days!

I partially wrote a couple of games in assembly language for the '64, including a sailing game with a "keel design" stage (it was America's Cup fever at the time, with the winged keel having just broken the longest winning streak in sporting history), and a never-completed fighter-jet game – not quite a flight simulator, but close, with an out-of-the-cockpit pseudo-3D view (sometimes called 2.5D). Writing games was a great learning exercise – they're short, simple projects with no serious correctness or reliability requirements, that build skills in a fun way, and are exactly the kinds of projects that would make ideal assignments in a "programming 101" course, although for some reason schools don't seem to use games as their early projects, which I think is a mistake. I also wrote my first pieces of non-game software on the '64, including a simple drawing program controlled by a joystick (no mouse back then!), and a stock market charting & analysis program called The Chartist. More than 25 years later, it was still being used, in hugely improved form – it wasn't finally retired until 2014!

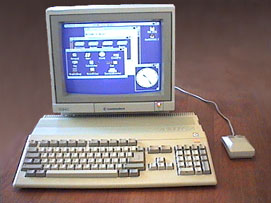

Three years later, my family upgraded to an Amiga, a tremendous step up both in performance and especially functionality, with its amazing, mouse-driven graphical user interface, powerhouse 4096-color graphics, and multitasking OS. This further increased my interest in processors and graphics hardware, as well as kindling an interest in user interfaces and operating systems. I quickly learned 68k assembly language, then the C programming language, again spending most of what little money I had on a C compiler and the amazing Deluxe Paint drawing and 2D/3D animation program, which I also used to do my first video titling and animation work, for home movies and high-school presentations. I wrote a new version of The Chartist for the Amiga that was interactive, producing charts in windows on the screen rather than as printouts. My aborted fighter-jet game from the '64 served as the inspiration for teaching my brother to program in BASIC on the Amiga, where together we had lots of fun writing a 2D scroller fighter-jet game – JetWash – like many popular arcade games of that era.

Second computer, an Amiga

I learned a lot about CPU performance and graphics hardware by writing little graphical demos on the Amiga, which was the graphics pioneer of its day (in the consumer market, at least, way ahead of the black & white Macintosh and lame 16-color IBM PCs). This taught me just how amazingly fast a computer can be when you get everything just right, and how quickly that speed falls away when things are even a little bit off; how important it is to play to the strengths of the memory system and avoid its weaknesses; and when/

Having learned assembly language first, then C, gave me a deep, intimate insight into how compilers work and the machine code they really generate from programming languages. Normal students learning to program rarely learn these things from the bottom up as I did, and that's a great pity in many respects. Learning how the system really works "under the hood" is absolutely invaluable! That's why today I strongly believe all computer-science degrees should have a compilers course as a mandatory part of the degree – it turns hardware and programming languages from "magic" into nuts-and-bolts understanding, and the students come out the other side "enlightened" and far, far better programmers.

I was just getting comfortable programming in C (and really loving it, such a huge step up from assembly!) when I finished high school and was awarded a full scholarship to Bond University, one of only 2 awarded university-wide that year. That was very fortunate, because without a scholarship I could never, ever have afforded to attend an expensive, elite university like Bond...

Bond University (Undergrad)

My computer science degree at Bond University is where I learned much of what I know about computers and programming. My interest and existing background knowledge, not to mention having five and a half years of programming already under my belt, meant everything clicked perfectly.

I really enjoyed the whole degree, especially the courses on data structures, hardware, compilers, operations research (graph/

While at Bond, I was instrumental in getting Internet access for students. The university was reluctant, worried about security due to the paranoia caused by the movie War Games, but I felt strongly that the Internet was the future, so I organized a huge petition pleading with the university to allow students and staff to dial in from home. This was in 1990, several years before the World Wide Web, and only one year after Australia itself was first connected to the Internet. 9600 baud modems were state-of-the-art (9.6kbps, ie: 1 KB/sec, literally 10,000 times slower than a modern Internet link), and things like the Usenet newsgroups and anonymous FTP were all the rage, and most of all, email. A few years later when the web arrived, Bond was ahead of its time, thanks in no small part to that petition.

Having access to the Internet allowed me to do a bit of open-source development too, including enhancing the hard-disk drivers of my OS kernel (elevator algorithms!) and doing some bug fixing in the GPU driver for my graphics card (anyone remember Godzilla's Guide To Porting The X Server?). This was well before Linux existed, or even the term "open source" for that matter, back when BSD UNIX, the X Window System and the GNU Project were the initial embers, just catching on. It's been amazing to watch open source grow over the years, and in general I'm a supporter (and contributor), although I have conflicting thoughts about open source, especially its commercial viability in the long term.

By the end of the degree, my primary interests were still the same – programming languages, processors and graphics – but I had also been given the chance to explore many other areas, including AI, which I very much enjoyed and double majored in. I even wrote a chess game which debuted at one of the university's open days, along with a simpler tic-tac-toe game which the uni promised anyone $100 if they could beat (knowing all the while that it wasn't possible, of course). It's been great to see AI make major progress in recent times, because AI struggled so much for so long – there was a famous old AI saying: "A year spent in artificial intelligence is enough to make one believe in God" – Alan Perlis. Ironically though, today the public now severely overestimate what is possible with AI, and have little understanding of how it really works, its realistic limits and overall fragility. Reckless early adoption of AI could lead to serious problems, and over-hyping AI technology will lead to, at the very least, mass disappointment, likely causing an AI bust and another AI winter.

My final undergraduate degree project was a visual, programmable microarchitecture simulator and debugger, with a nice graphical user interface, intended to be used to assist in teaching the concepts of instruction-set architecture, microarchitecture and microcode. Unfortunately, it was never used – the RISC revolution made microcode obsolete. Timing was against me there – if I'd done the project just one year later, it would have been based on RISC ideas and useful going forward, not to mention being a simpler project.

Giving valedictory address at Bond

My interest in graphics led me to go further than most students with presentations. I prepared several spectacular 2D and semi-3D animated presentations and displayed them on the huge screens of Bond's lecture theaters. Nothing shows up boring, static slides better than exciting, animated content, on a cinema-sized screen, with backing music! They were so popular that staff and students from other departments often came, just to see "how to really present something well", and those Bond students were already ahead of the pack, using PowerPoint and learning proper presenting skills from a great presenter (Richard Tweedie), at a time when other universities were still stuck on lame, old overhead projectors.

By the time I reached my honors year, I wanted to take on a big project related to compilers and processors, so I chose to write a C++ compiler targeting the MIPS RISC and Motorola 68k CISC architectures. This turned out to be much too big a project, and I ended up working like a slave to complete it on time (8 months). Even then, it ended up only compiling a subset of C++ (a large subset, although C++ circa 1991 was a much simpler language than it is today). Considering the time I had, my J++ compiler did an excellent job of the difficult problem of instruction selection for the Motorola 68040 processor – an extreme CISC design – using a novel, original instruction-selection algorithm I invented, in an otherwise simple, clean, RISC-style code generator. Its instruction selection was competitive with production compilers of the time, showing that it's possible to use low-level, RISC-like intermediate code and still perform excellent instruction selection for older, complex instruction sets (something we see today in projects like LLVM).

Encouraged by Richard Tweedie (Dean of CS) to try to set a record, I took on more than a standard workload and completed the degree early, graduating from Bond in May 1992, less than two and a half years after starting there, setting the national record for the fastest undergraduate honors degree (2.33 years for a 4-year degree), as well as the GPA record at Bond (27 out of a possible 29 high distinctions, 3.9 out of a maximum 4.0, 113/116, or 97.4%).

Winning university valedictorian was a wonderful honor, and something I'm proud of to this day. My valedictory address focused on how computers were changing the world, at a time when computing technology was just starting to make a mainstream impact, but most of the general public neither owned nor used a computer yet – most students still used pen & paper, and most offices still used typewriters. In fact, Bond was actually highly controversial for requiring all students to have their own computer, something I touched on in my speech (in a positive way, of course). 20 years later, Marc Andreessen's famous article Software Is Eating the World said much the same thing, albeit in longer form and with a somewhat more financial angle for his VC and Wall Street Journal audience.

I was also asked to be nominated for Young Australian of the Year at this time, but I declined that offer because I'm not a believer in subjective awards, such as socio-political awards, knighthoods or other such honors. If an award can't be judged in a fair, clearly objective way, then it's likely to do more harm than good IMHO, because for every winner "chosen", there are several other good people overlooked, likely equally deserving. To paraphrase the great physicist Ernest Rutherford, ultimately everything is either mathematics or stamp collecting (ie: objective or subjective), and I prefer to stay on the objective side.

Graduating from Bond was the end of a major chapter. It was over all too quickly, but I had a great time at Bond, and I'll never forget it. Those were probably the best couple of years of my life! Of course, at that point I was still very young. When I gave the valedictory address, for example, I'd turned 19 just two months earlier. And being that young can be a problem...

Move To America, Or Not?

Alpha, the future of computer architecture in 1992 (or not)

Towards the end of my undergraduate degree at Bond, I was contacted by Bob Taylor from Digital Equipment Corporation's research labs (yes, that one, the guy who created Xerox PARC), with the idea of having DEC fund my PhD – if I moved to the USA. DEC's offer was tempting, both well paid and highly prestigious, with the likely outcome being a position in DEC's research labs as they pushed forth with the Alpha architecture, which looked to be the future of processor hardware at the time (even Microsoft saw this, and ported Windows NT to Alpha).

I struggled greatly with the decision, going back and forth several times, and receiving conflicting advice from the many people I asked, but in the end, I decided to decline the offer of a DEC-funded scholarship to Stanford University. At the time, I didn't really want to move to America. This was partially because I was a loyal Australian and wanted to help build a strong local computing industry, which did seem possible back then, before we understood the chasm/

Today, I sometimes regret that decision. Most of all, though, I regret having to make it at such a young age. That's why I'm very much against accelerated degree programs now. It is possible to get where you're wanting to go too early, and that can be just as problematic as getting there too late. As the book Outliers argues so spectacularly, in life, timing is everything. Had I been 22 years old, as would have been normal, I may well have felt differently, and the entire course of my life might have been very different. Certainly, I would have been far more ready to make such a major life decision.

Family seeing me off at the airport as I head to America for another conference

Looking back now, of course, we have the 20/20 hindsight of knowing that the Alpha architecture did not succeed commercially, despite being the best-designed architecture with the fastest processors in the world, and indeed DEC no longer even exists as a company, so it's difficult to say exactly where I would have ended up, but it is fair to say things would have been very different.

I tried to make up for not moving to America by flying over to attend all of the SIGPLAN conferences on programming language design & implementation (SIGPLAN PLDI, the top conference in the field), as well as a couple of ASPLOS conferences (another near-top conference that focuses a bit more on the hardware side). It was an incredible strain on my meager finances, but I always found the conferences to be highly enjoyable, and I especially relished the chance to interact with other people "like me". In many ways, it's a great pity the conference system is fading away today, replaced by the web, which is certainly faster and vastly cheaper, but also much less personal.

Queensland University of Technology (PhD)

Instead of moving to America, I chose to do my PhD at QUT under John Gough (Dean & acting Pro Vice Chancellor). At that time, QUT had the leading languages/

My PhD research went very well indeed, focusing on the area of code optimization (making software run faster), in particular by direct, whole-program optimization of executable files. My thesis (dissertation), entitled VGO – A Very Global Optimizer, was met with great acclaim from its examiners, including this fantastic quote by Charles Fischer, author of a leading textbook and former editor of TOPLAS, the premier journal in the field...

This is quite simply the finest Ph.D. dissertation in experimental computer science that I have ever read. The quality of the writing is that of a polished research monograph. The quality of the research, both in depth and breadth, is extraordinary. The author displays a knowledge of compiler theory and practice worthy of a senior don, far beyond someone just establishing his credentials. — Charles Fischer

Most of my PhD work remains confidential, as I may wish to commercialize the work and release a product from it, depending on how the future of computer architecture turns out. It's all in the low-level technical details and industry trends & turns, and for now it's not the right time, but there is a real chance to "change the world", so I'm not going to give it away!

John Gough, PhD advisor

One major piece of the work that is not confidential is the value range propagation algorithm, which I invented in 1994 and presented as a paper at the SIGPLAN PLDI conference in 1995. That algorithm is now used by many, possibly even most, production compilers, both for branch prediction and for other value/

In keeping with my high-end presentation style from Bond (and high school, and later geology/

My PhD thesis included three "reader background" appendices, intended to ensure readers would understand and "get" what I was talking about, not be struggling with the basics or terminology of the modern compilers & hardware world. Due to surprising, unexpected popular demand, those appendices have since been extracted into separate articles and gone on to lead independent lives of their own.

One of those articles, Modern Microprocessors – A 90-Minute Guide! has grown and grown in popularity over the years, and is now one of the world's most popular and widely used introductory articles on processor design & microarchitecture, with well over a million people having read it! It's often in the Google top 10 for the word "microprocessors", and has even been as high as number 2. If it were a book, I'm told it would officially qualify as a best seller, though I think that's a bit of an exaggeration. Still, it's amazing to know that article has now been read by far more people than belong to the ACM, the world's association for computing professionals.

The article is updated now and then to keep it current, and is used by university courses around the world, as well as being "required reading" for many VC startups in Silicon Valley. And to think, I almost didn't include it in my thesis at all, thinking everyone who wanted to probably already knew that stuff!

The other 2 introductory articles, Basic Instruction Scheduling (and Software Pipelining) and Register Allocation by Graph Coloring, are far less widely read, but are nonetheless used by some university compiler courses, because they're better than most textbook coverage. It's nice to know people must really like my writing style!

Lighterra

Part way through my PhD, when I began to feel my work had real commercial potential, I decided to switch my PhD to part-time and start my own small company, Lighterra (originally called PattoSoft, first named back when I was 12 years old after my nickname, "Patto"). The idea was for the company to provide an income via some sideline system administration and networking work, while allowing me the time to pursue the commercialization of my PhD research.

At that time (late 1995), the World Wide Web was just beginning to grow, and I'd been completely blown away by seeing Lynx, a simple text-only web browser, 18 months earlier. I felt sure the web was the next big thing, which was confirmed by the release of Mosaic and then Netscape over the next months. Recognizing the opportunity, I helped my parents start a web-design company, and set myself up to do system administration and networking work for their customers, while I simultaneously completed my PhD research and commercialized it.

That was the plan, anyway. Unfortunately, things didn't go quite to plan. Once again, timing wasn't on my side.

What began as a software company with a small networking and system-administration sideline quickly morphed into what was, effectively, a networking services business. Far from allowing plenty of free time to develop and commercialize my PhD research, the explosive success and ongoing, rapid evolution of the World Wide Web resulted in me being swamped with in-demand, time-critical but uninteresting work instead, leaving little or no spare time. Technically, the work focused mainly on networking, cybersecurity and UNIX system administration (primarily Solaris & Linux), but it also included custom software development for tasks such as enhancing internal search engines, hardening email daemons, customizing content caching/

Installing server at PEER1 data center in LA as part of HostEverywhere CDN

With great effort, I was able to squeeze in the time to complete my PhD by the end of the year 2000 (officially Feb 2001), but I continued to be swamped by other work, and precious little was being done toward commercializing my PhD. I was still determined to finish and release a product from the work, but I just couldn't get the time. The whole situation was deeply frustrating and depressing, and if it hadn't been for the book Learned Optimism, I may well have given up on everything at that point. Years went by, and I hung on hoping things would improve, but nothing changed – sys admin was very different back then from how it is today, with so many more tools and so much more automation, things that we were just building a generation ago!

Eventually, something just had to give, so 10 years after finishing my PhD, and after a decade and a half of mainly system administration and cybersecurity (grrr!), I decided a major course correction was necessary. Initially, I tried writing an iPhone app, which was both well-liked and highly rated (4.5 stars), but not financially viable (like most apps, sadly). Following that, and given my skills and qualifications, I considered taking a teaching/

Digital Reflections

Taking the senior partner role at Digital Reflections, which by that time had a long history of web design mostly for mining companies, and pivoting it to 3D graphics and data visualization for the mining industry, allowed me to cease providing sys admin, networking & cybersecurity services for most customers, phasing out my HostEverywhere CDN, while giving me an income from interesting 3D graphics work, largely part-time, and leaving time to actually pursue my real work, for the first time in years!

Despite being only part-time and not my primary focus, the 3D geological data-visualization work of Digital Reflections did require writing several new 3D graphics and GIS utilities, taking drilling, magnetics and other geophysics and geochemical data and turning it into 3D models, to use in animations to show the size, shape and grade of mineral deposits – from spreadsheets to a nice 3D visualization!

Naturally, my long-time inclination toward exciting, flashy graphical presentations was ideal for this, but the work did require honing my semi-decent 3D modeling & animation skills, initially with LightWave, famous for being used for the various Star Trek TV series, and later with Blender, the open-source newcomer currently displacing other 3D modeling & animation tools in Hollywood.

Digital Reflections has now become a well-respected name within the mining industry, as a behind-the-scenes provider to many of the industry's success stories. Its 3D animations are a common sight at mining conferences and on mining company websites. Mining isn't the most exciting field, but it is Australia's largest industry (world's largest producer of iron, second largest of gold, and third of lead & zinc... and I never work on fossil fuels, having been 100% green energy since 2002). The 3D animations really are quite interesting, considered the "best mining videos I've ever seen", and they've led to quite a few new mines, as well as saving some old mines worth billions of dollars! I encourage you to check them out if you're interested...

Miscellaneous Tidbits

- Color Inkjet Printer Drivers

- In 1994, I was partially involved in the development of the early color inkjet printer drivers (for GhostScript), in particular their dithering algorithms (to create the illusion of millions of colors from just the 4 CMYK ink colors), yet I went paperless in 2002 and haven't owned a printer since.

- Twitter App

- In 2008/9, I wrote a Twitter app, yet I have never been into social networking, and I'm still not today. Why write such an app then? It was the standard way to learn iPhone programming back in the day, before Twitter closed its API (and long before it changed to its current form).

- High-DPI "Retina" Images

- In 2012, I was the author of the highsrc proposal for adding high-DPI "retina" images to the web, for then-new devices like the iPad 3. The final W3C/WHATWG chosen solution, srcset, was quite similar to highsrc – an attribute to the <img> tag, not a whole new tag or new image file format, like other proposals. So every time you see a high-DPI image on the web, I played a small part in that.

- Video Encoding

- In 2012, I was the author of a widely used article about high-quality video encoding, one of several that were influential in achieving high video quality on the web – you can probably remember when web video such as YouTube went from quite poor quality to quite good. So every time you watch a high-quality video on the web or a streaming service, I played a small part in that.