The History of Computers

During My Lifetime

There is no reason for any individual to have a computer in his home.

Index - 1970s - 1980s - 1990s - 2000s

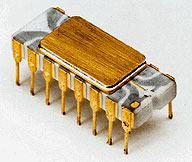

The World's First Microprocessor - The Intel 4004

In 1970 and '71, responding to a request from the Japanese calculator maker Busicom for 12 custom chips for a new high-end calculator, and with incredible overkill, a young startup company named Intel built the world's first single-chip general-purpose microprocessor. Intel then bought back the rights from Busicom for $60,000 (~$320,000 today).

The Intel 4004 ran at a clock speed of 740 kHz and contained 2300 transistors (see chip photo). It processed data in 4 bits, but it used 12-bit addresses, so the 4004 addressed up to 4 KB of program memory, along with 640 bytes of separate data memory. It had sixteen 4-bit registers and ran an instruction set containing 46 instructions, each taking either 8 or 16 clock cycles to complete, yielding performance of about 60,000 instructions per second (92,000 peak). This made it roughly as powerful as the original 1946 ENIAC, in a chip the size of a fingernail, at a time when the CPUs of most computers still took up several large circuit boards.
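The throughput figures above follow directly from the clock speed and the two instruction lengths. As a rough check that ignores everything except cycle counts:

$$
\frac{740{,}000\ \text{cycles/s}}{8\ \text{cycles/instruction}} = 92{,}500\ \text{instructions/s},
\qquad
\frac{740{,}000\ \text{cycles/s}}{16\ \text{cycles/instruction}} = 46{,}250\ \text{instructions/s}
$$

A typical mix of short and long instructions therefore lands in between, around 60,000 per second. Likewise, 12-bit addresses give $2^{12} = 4096$ distinct program locations, which is where the 4 KB of 8-bit program memory comes from.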

The 4004 was a very big deal for Intel, which was only 3 years old at the time. It had been founded in 1968 by Bob Noyce, renowned as co-inventor of the integrated circuit in 1959, and Gordon Moore, of Moore's Law fame. Ted Hoff had the "fairly obvious" idea of building a single-chip general-purpose processor running software instead of 12 custom chips, while Federico Faggin was the lead designer of the processor itself. Faggin later founded his own chip company, Zilog, which competed with Intel for the rest of the 1970s. As a result, Intel attempted to "disown" him and deny his crucial role in the birth of the microprocessor, but if you look closely at the chip photo, you can see Faggin's initials, F.F., in one of the corners.

Intel followed the 4-bit 4004 with the 8-bit 8008, then the highly successful 8080, and the hugely successful 16-bit 8086 (and 8088) that formed the basis of the x86 architecture still in use today.

UNIX and The C Programming Language

Meanwhile, at the other end of the industry, a talented programmer at AT&T Bell Laboratories named Dennis Ritchie created the C programming language, based on the earlier B language of his Bell Labs colleague Ken Thompson, which was itself based on BCPL, a language originally designed for writing compilers for other languages. C was not the first high-level language, nor even the first used to write an operating system (Multics was written in PL/I), but its low-level approach and use of memory addresses as pointers made it practical to replace machine-specific assembly language almost entirely, even for the internals of an operating system, while remaining small and portable. C thus became the archetypal systems programming language – no longer was an operating system tied to a particular piece of hardware.

Ritchie and other programmers at Bell Labs had been involved in writing Multics – the ultimate operating system – together with colleagues at MIT and General Electric. When AT&T withdrew from the faltering Multics project, Thompson, Ritchie, Brian Kernighan and several others, in a rebellion against the size and complexity of Multics, began writing the much simpler UNIX operating system. UNIX took the multi-user and multitasking ideas from Multics, but used a drastically simplified yet far more flexible process model – fork() and exec() – and added a revolutionary new filesystem designed by Thompson. Other Multics concepts such as I/O redirection were also simplified and generalized, leading to new concepts like pipes, which carried data between commands and in turn encouraged a highly modular shell environment with many small, simple utilities used in flexible combinations. Thanks to this modular and minimalist design, only two years were needed to develop the initial core system software, and once the kernel was rewritten in C in 1973, UNIX could be moved to new hardware with relatively little effort.

AT&T, which was barred from entering the computing market by its antitrust agreement with the US government, decided to license the code to universities and companies at little or no cost. Much of the AT&T code was later rewritten and expanded by Bill Joy and others at UC Berkeley to create the BSD UNIX variant, adding features such as the vi text editor and TCP/IP networking support ("BSD sockets"). The superior but incompatible BSD distribution unintentionally started a compatibility battle between the two variants which lasted more than a decade and became known as the "UNIX wars".
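To make the fork() and exec() model and pipes more concrete, here is a minimal sketch of how a shell might run a two-command pipeline such as `echo hello | tr a-z A-Z`. It is written in Python only because its `os` module exposes the same POSIX calls (`pipe`, `fork`, `dup2`, `execvp`, `waitpid`) almost one-to-one. The helper names `run_pipeline` and `_exec_or_die` are hypothetical, the sketch assumes a UNIX-like system, and a real shell adds job control, error reporting and much more.

```python
import os

def _exec_or_die(argv):
    """Replace the current (child) process image with argv; exit if exec fails."""
    try:
        os.execvp(argv[0], argv)   # on success this call never returns
    except OSError:
        pass
    os._exit(127)                  # conventional "command not found" status

def run_pipeline(producer, consumer):
    """Run the equivalent of `producer | consumer` and return consumer's output.

    producer and consumer are argv lists, e.g. ["echo", "hi"].
    """
    pipe_r, pipe_w = os.pipe()     # producer's stdout -> consumer's stdin
    out_r, out_w = os.pipe()       # consumer's stdout -> this (parent) process
    all_fds = (pipe_r, pipe_w, out_r, out_w)

    pid1 = os.fork()
    if pid1 == 0:                  # first child: becomes the producer
        os.dup2(pipe_w, 1)         # redirect stdout into the pipe
        for fd in all_fds:
            os.close(fd)
        _exec_or_die(producer)

    pid2 = os.fork()
    if pid2 == 0:                  # second child: becomes the consumer
        os.dup2(pipe_r, 0)         # read stdin from the pipe
        os.dup2(out_w, 1)          # send stdout back to the parent
        for fd in all_fds:
            os.close(fd)
        _exec_or_die(consumer)

    # Parent: close the ends it does not use. If pipe_w stayed open here the
    # consumer would never see end-of-file, and if out_w stayed open neither
    # would our own read loop below.
    for fd in (pipe_r, pipe_w, out_w):
        os.close(fd)
    chunks = []
    while chunk := os.read(out_r, 4096):
        chunks.append(chunk)
    os.close(out_r)
    # Reap both children so they do not linger as zombie processes.
    os.waitpid(pid1, 0)
    os.waitpid(pid2, 0)
    return b"".join(chunks).decode()
```

For example, `run_pipeline(["echo", "hello unix"], ["tr", "a-z", "A-Z"])` returns `"HELLO UNIX\n"`. The design point, as in UNIX itself, is that neither program knows it is part of a pipeline: each simply reads standard input and writes standard output, and the parent wires them together between fork() and exec().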

The C programming language went on to become the dominant language for both system software and application development in the 1980s, across all operating systems, but UNIX and the C language were intimately tied from the very beginning – the C standard library was essentially the original UNIX operating system API. Several of the key features of UNIX – multitasking (which was called "time sharing" back then), virtual memory, multi-user design and security – did not reach the personal computer market for another fifteen years, and didn't reach the mainstream IBM PC for more than twenty.

The Floppy Disk

Invented by Dave Noble and Al Shugart at IBM, the first floppy disk was introduced in 1971. The basic technology of a floppy plastic disc coated with iron oxide and enclosed in a protective cover went on to become the dominant data exchange technology for almost three decades.

Compared to other technologies, floppy disks caught on very quickly, probably due to their low cost. The original 8 inch disk soon gave way to smaller 5.25 inch diskettes, which were adopted as an industry standard that lasted until the mid-1980s. Floppy disk drives were available for the very first generation of personal computers, although they were an expensive extra-cost external device rather than built-in.

From the mid-1980s, 3.5 inch floppy disks with hard plastic protective covers gradually became the standard, typically holding 720 KB at first and later 1.44 MB (a 2.88 MB format also existed but saw little use). Due to the high cost of hard disk drives at the time, it was commonplace in the '80s for personal computers to boot and run applications from one floppy disk while using a second floppy disk to store user files (sometimes in a second drive, often just by swapping disks). Slimming down the OS and application(s) to fit everything onto a single floppy disk was a real art form in those days.

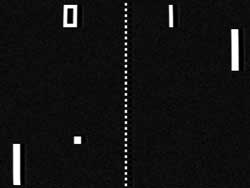

Pong - Video Games Arrive

In 1972, Atari introduced the first commercially successful video game – Pong. Based on ping pong (table tennis), Pong was a simple game, first sold as a coin-operated arcade machine and later, from 1975, as a home version that hooked up directly to a television set. Each player used a controller dial to control the vertical position of a bat on the screen, and a ball bounced between the two bats, possibly bouncing off the top or bottom of the screen in the process. This was groundbreaking stuff, and paved the way for the multi-billion-dollar games industry.

Pong was created by Al Alcorn, based on the nearly identical table-tennis game Atari's founder Nolan Bushnell had seen on the Magnavox Odyssey games console designed by Ralph Baer. This prompted a swift legal case from Magnavox, which was settled out of court for $700,000 (~$3.7M today). The commercial success of Pong in turn resulted in many copies from other companies, including Mattel, Activision and Nintendo, all of whom were also sued and ended up paying royalties, yielding almost $100 million (~$400M today) for Magnavox.

The avalanche of Pong clones ultimately inspired Atari to create a far better, more flexible games console based on a microprocessor and software rather than hardwired circuits – the Atari 2600 (released in 1977). Thanks to the flexibility of software, the Atari 2600 was able to run many different popular games over its lifetime, such as Space Invaders and Pac-Man, pushing sales of the console to more than 10 million units. Sales of the highly profitable game software were even greater (over 7 million copies of Pac-Man alone), making Atari the dominant games company of the late 1970s and early '80s.

The Winchester Hard Disk Drive

In 1973, IBM introduced the first sealed hard disk drive, the IBM 3340 Winchester, a dramatic improvement over earlier hard-disk storage systems which were cumbersome, sensitive, fragile devices the size of washing machines, with removable platters. Moving to a sealed mechanism with the platters and heads included together as one closely coupled unit required far less careful handling from users, as well as allowing a simpler head design with a much lower flight height – the head simply rode on the thin film of air naturally created by the spinning platter, just 18 millionths of an inch thick!

The 3340 hard disk drive was originally planned to use two 30 MB spindles, and was thus named the "Winchester" after the famous Winchester 30-30 rifle ("the rifle that won the west"), although the final product actually shipped with either one or two 35 MB spindles, for up to 70 MB of storage capacity. It offered a seek time of 25ms and a transfer speed of 885 KB/sec.

Over the following two decades, sealed hard disk drives (sometimes called Winchester drives) took their place as the primary data storage medium, initially in minicomputers, then in mainframes, and finally in personal computers starting with the IBM PC/XT in 1983. Over the lifetime of the technology, disk capacity improved by several million times, with single hard disks able to store terabytes of data, while prices consistently declined year after year, resulting in one of the greatest overall improvements in price/performance of all time.

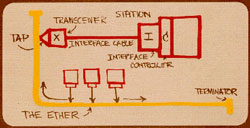

Ethernet - Local Area Networking

Although the concept of long distance, point-to-point packet-switched networking had already been developed as part of the ARPANET project, the idea of a local area network didn't become a reality until Bob Metcalfe invented Ethernet at Xerox PARC in 1973.

Metcalfe had previously worked with the ARPANET. In fact, use of the ARPANET was the topic of his PhD, but Harvard rejected his dissertation on the grounds that the work was not theoretical enough. While at Xerox PARC he then read a paper about the ALOHAnet network at the University of Hawaii, and was inspired to write a new thesis analyzing and improving on the ALOHAnet design (this second thesis was accepted). Due to the cost of laying cables between the Hawaiian islands, ALOHAnet used a shared medium – the radio spectrum – for communication between several different computers at once, rather than having each computer only talk directly with a computer at the other end of a wire. Random back-off and retransmission was used in the event of collisions if two computers happened to send packets at the same time. This became the basis of Metcalfe's idea for Ethernet, which implemented the same shared-medium approach and his improved collision detection and retransmission algorithms, but over a physical wire – the "ether" – which went past all computers on the network, much like a bus in a computer design.
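The random back-off idea can be sketched using the truncated binary exponential backoff rule that was later standardized for 10 Mbps Ethernet in IEEE 802.3; the details may differ from Metcalfe's original 1973 design, and the function names below are hypothetical. After each successive collision on the same frame, the range of possible waiting times doubles, so a busy network automatically spreads its retransmissions out.

```python
import random

# Classic 10 Mbps IEEE 802.3 parameters (assumed here for illustration).
SLOT_TIME_US = 51.2   # one slot = 512 bit times at 10 Mbps
BACKOFF_LIMIT = 10    # the random range stops doubling after 10 collisions
ATTEMPT_LIMIT = 16    # the frame is abandoned after 16 collisions

def backoff_slots(collisions, rng=random):
    """Truncated binary exponential backoff.

    After the n-th consecutive collision on the same frame, wait a random
    whole number of slot times chosen uniformly from 0 .. 2**min(n, 10) - 1.
    """
    if collisions < 1:
        raise ValueError("backoff only applies after at least one collision")
    if collisions >= ATTEMPT_LIMIT:
        raise RuntimeError("excessive collisions: frame dropped")
    k = min(collisions, BACKOFF_LIMIT)
    return rng.randrange(2 ** k)

def backoff_delay_us(collisions, rng=random):
    """The same random choice expressed as a delay in microseconds."""
    return backoff_slots(collisions, rng) * SLOT_TIME_US
```

Doubling the range is what lets the network adapt without any central coordination: two stations that collide once have a 50% chance of picking different slots, and each further collision makes a repeat collision less likely.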

Ethernet had a lot going for it, including the "triple threat" – it was simple, it was fast, and it was cheap because it used common coaxial cables. As a result, it quickly became the dominant local area networking standard for mainframe and minicomputer systems, as well as the early UNIX workstations and PC-based office networks. Adoption was helped considerably by Metcalfe leaving Xerox PARC to found 3Com, which made Ethernet chips and expansion cards for popular systems such as the IBM PC and VAX minicomputers. Metcalfe also persuaded DEC and Intel to work with Xerox and promote Ethernet as a unified front against IBM's Token Ring. By the early 1990s, personal computers started coming with Ethernet as a standard feature, and by the late '90s it was almost mandatory.

To reduce the cost of cabling even further, alternative cabling systems were introduced over time, including thinner coaxial cable and ultimately low-cost, unshielded telephone-style twisted pair cabling, which allowed a star-based topology that was better suited to wiring up office buildings. Over time, the speed of Ethernet was also increased, first from 3 Mbps to 10 Mbps, then 100, 1000, 10,000 and onwards. Almost 30 years after its creation, Ethernet even made its way back to its radio-spectrum origins, in the form of WiFi.

The First Personal Computer - The Altair

Photo © UC Davis Computer Science Club

The Altair wasn't technically the first "personal computer", but it was the first to grab any real attention. Designed by Ed Roberts, the Altair launched as the cover story of Popular Electronics magazine in January 1975, and an astonishing 2000 Altairs were sold that year – more than any computer before.

It was named after a planet from a Star Trek episode, and cost only $439 (~$1800 today), but it was hardly a computer at all even by the standards of the day – the Altair was a kit which you had to build yourself! It was based on Intel's 8-bit 8080 processor, and included 256 bytes of RAM (expandable to 64 KB), a set of toggle switches, an LED panel, and that's all. If you wanted anything else – like, say, a keyboard, screen or floppy disk drive – you had to add expansion cards!

Although the general public showed absolutely no interest in the kit approach, fortunately the high-tech enthusiasts of the time were more than willing to build and expand the new machine themselves, and Altairs with added RAM and a floppy drive became the early de-facto standard for microcomputers, forming the basis of hobbyist computer clubs such as the Homebrew Computer Club in the San Francisco Bay area, which was rapidly becoming known as Silicon Valley.

Two bright and ambitious young programmers, Bill Gates and Paul Allen, saw huge potential in the new machine and offered to write an interpreter for the simple BASIC programming language for Altairs with at least 4 KB of RAM, allowing enthusiasts to explore programming rather than just assembling hardware. Although they had developed it on a simulator without ever touching a real Altair, it famously worked the very first time it was demonstrated. Gates and Allen felt the product's potential, and the potential of software in general on these new personal microcomputers, was so great that both dropped out of college and started a new company, focused not on building hardware but on writing software for microcomputers, which they named Micro-Soft (later renamed Microsoft).

CP/M - An Injustice

Developed by Gary Kildall starting in 1973, CP/M stood for Control Program for Microcomputers and was the first operating system to run on computers from multiple different vendors. It quickly became the preferred OS for software development on the Altair and Altair-like hobbyist systems being built by enthusiasts at the time, based on the Intel 8080 processor (and the compatible Zilog Z80). In the mid-1970s, CP/M looked like it would go on to rule the microcomputer world, but unfortunately the early consumer-ready personal computers such as the Apple II chose not to use CP/M, electing instead to provide an interpreter for the BASIC programming language as their primary "operating system".

Several years later, CP/M should have been the operating system of IBM's upcoming personal computer, the IBM PC. Seeing the success of the burgeoning personal computer market, IBM sought out Gary Kildall to port CP/M to their upcoming system, which was based on the somewhat different, 16-bit Intel 8086 processor. Unfortunately, Kildall didn't grasp the importance of the meeting, expecting IBM's PC to be too expensive and a commercial failure, and left the negotiations to his wife and business partner while he and a friend flew Kildall's private plane on a parts errand. Accounts differ regarding the details of the IBM encounter, but Kildall's leaving reportedly upset the IBM representatives. When IBM then insisted on a non-disclosure (secrecy) agreement being signed, and Kildall's attorney advised against it, the talks broke down altogether. Gary Kildall returned hours later and tried to reach an agreement, but the damage was already done.

In the end, IBM contracted the young Microsoft company, known for its BASIC interpreter, to provide the operating system for the IBM PC. Microsoft purchased Tim Paterson's CP/M-like QDOS as a starting point, and as a result many of the features of CP/M made their way directly into Microsoft's PC-DOS (which Microsoft also licensed to other manufacturers as MS-DOS), including "8.3" filenames, the use of letters to identify drives (A: etc), and the names and syntax of most of the main commands and system calls, many of which Microsoft admitted were clean-room reverse engineered. This prompted Kildall to threaten IBM and Microsoft with legal action, but at the time it was concluded that intellectual property law regarding software was too unclear. IBM dodged the issue by agreeing to offer CP/M as an alternative at the time of sale of every IBM PC, whilst simultaneously guaranteeing its failure by pricing CP/M six times higher than the nearly identical PC-DOS.

Although the episode is widely recognized as an injustice within the computing industry, Kildall was forced to endure constant comparisons between himself and Bill Gates in the mainstream media for the rest of his life, and never fully recovered from the "went flying" incident.

Vector Supercomputers - The Cray-1

Photo (cc) Rama, Wikimedia

In 1976, Seymour Cray introduced the Cray-1, by far the fastest computer in the world at the time. Not only that, but the Cray-1 had the historically unique distinction of being simultaneously the fastest computer in the world, the most expensive, and the computer with the best price/performance (at least for floating-point scientific code). Viewed from above, the Cray-1 looked like the letter C, in part to keep all of the circuit boards as close together as possible to boost performance.

The Cray-1 ran at a clock speed of 80 MHz and was an extensively pipelined RISC-like design, similar to Cray's earlier CDC 6600 and 7600, and very much unlike most other computers of the day. It could be outfitted with up to 8 MB of RAM, requiring hundreds of densely packed circuit boards each containing 288 chips, and offered scalar performance of around 80 MFLOPS and vector performance of 136 MFLOPS (250 peak). The freon-cooled Cray-1 introduced a number of technical firsts. Chief among them were an auto-vectorizing FORTRAN compiler, which could use the vector instructions in many simple but common cases without programmers having to vectorize their code by hand, and a vector-register architecture consisting of eight 24-bit integer/address registers, eight 64-bit scalar data registers, and eight vector registers, each holding 64 elements of 64 bits. The use of vector registers greatly improved performance by reducing memory reads and writes compared to earlier memory-to-memory vector designs, allowing code to perform several compute instructions on the vector data once it had been read from memory into the CPU.
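A short sketch shows why the vector registers mattered. A loop over an array of any length is split ("strip-mined") into pieces of at most 64 elements; each piece is loaded into registers once, several operations are applied to it, and it is then stored with a single write. The Python below only mimics that data flow: `VLMAX`, `saxpy_strip_mined` and the use of Python lists as stand-in registers are illustrative assumptions, not Cray code or the actual output of Cray's compiler.

```python
VLMAX = 64  # elements per Cray-1 vector register

def saxpy_strip_mined(a, x, y):
    """Compute a*x[i] + y[i] for every i, one strip of <= VLMAX elements at a time.

    Returns (result, strips), where strips counts the register-sized chunks.
    """
    if len(x) != len(y):
        raise ValueError("x and y must have the same length")
    result = list(y)
    strips = 0
    for start in range(0, len(x), VLMAX):
        vl = min(VLMAX, len(x) - start)          # like setting the vector length register
        vx = x[start:start + vl]                  # vector load #1
        vy = result[start:start + vl]             # vector load #2
        vt = [a * xi for xi in vx]                # multiply: operands already in "registers"
        vy = [ti + yi for ti, yi in zip(vt, vy)]  # add reuses vt with no extra memory read
        result[start:start + vl] = vy             # one vector store per strip
        strips += 1
    return result, strips
```

With 150 elements this takes three strips (64 + 64 + 22). In a memory-to-memory design the intermediate vector `vt` would have been written to memory and read back again; keeping it in a register is exactly the saving described above.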

The most important practical feature of the Cray-1, however, was simply its fast scalar performance – the Cray-1 wasn't the first vector supercomputer, but it was the first to be fast on non-vector code as well. This meant the Cray-1 did everything fast, which was probably the most significant reason for its success: some customers bought it even though their problems were not vectorizable at all. Whatever the reason, the Cray-1 was the first commercially successful vector supercomputer, selling almost 100 systems priced at more than $5 million each (~$20M today), and creating a whole new market for high-end vector machines and vectorizing compilers.

The Apple II - Personal Computers Arrive

Photo (cc) Mémoires Informatiques

Steve Wozniak's Apple II was really the beginning of the personal computer boom. Loosely based on his Apple I hobbyist system, which he had demonstrated at the Homebrew Computer Club and which friend and Apple co-founder Steve Jobs had encouraged "Woz" to sell, the much more polished Apple II debuted at the first West Coast Computer Faire in San Francisco in 1977. With a built-in keyboard and graphics support, and with the BASIC programming language built into ROM as the "operating system", the Apple II was actually useful right out of the box!

The Apple II was based on the low-cost MOS 6502 processor and had color graphics – a huge innovation at the time, and the reason for the colorful Apple logo. In its original configuration with just 4 KB of RAM it cost $1298 (~$4700 today). In 1979 the standard memory was increased to 48 KB with the introduction of the Apple II Plus, which made writing useful software much more practical. To keep the cost reasonable, the Apple II initially used an audio cassette drive for storage, but the tape drive didn't work very well and most Apple II owners bought the floppy disk drive when it was released in 1978 at the amazing price of just $595 (~$2000 today), thanks once again to Wozniak's brilliantly frugal engineering.

The 8-bit MOS 6502 processor was chosen not because it was powerful, but because it was cheap. Its lead designer, Chuck Peddle, recognized the world was crying out for an ultra-cheap microprocessor, and when it was introduced in 1975 at an incredibly low $25, the 6502 was a real bargain – so cheap, in fact, that some people thought it was a hoax (compared with $179 for Intel's 8080 and $300 for the Motorola 6800). The 6502 contained 3510 transistors (see chip photo) and ran at 1 MHz. To keep the size – and therefore cost – to a minimum, it only had 3 registers (accumulator, X and Y) plus a stack pointer, but this was acceptable because at that time accessing RAM was almost as fast as the microprocessor's registers anyway, so it was simpler and more economical to just rely on RAM access. To support this, the 6502's instruction set contained 56 instructions which used 9 quite flexible addressing modes. For a whole generation of programmers (myself included), 6502 assembly language was the second programming language they learned (BASIC was the first). Fifteen years later, the 6502 was still being used, in the Nintendo Entertainment System.

Over its lifetime, the Apple II series sold more than 5 million units, an absolutely unheard-of number for a computer. It almost single-handedly kick-started the personal computer revolution, ushering in the era of mass-market consumer adoption. Within a couple of years, it became commonplace to see computers – Apple IIs – in the real world for the first time: in homes, offices and schools, places where a computer would never have been thought of just a few years earlier.

The Commodore PET

The PET was the first in a line of low-cost Commodore computers designed to bring computing to the masses. Commodore had previously been a calculator company, and from that market it had learned – the hard way – the critical importance of price, having been undercut by its own supplier when Texas Instruments chose to enter the calculator market directly. When the low-cost MOS 6502 processor appeared on the scene, Commodore's founder Jack Tramiel seized the opportunity and bought MOS Technology outright, then launched the PET to compete with and undercut the Apple II.

Just like the Apple II, the PET came with 4 KB of RAM, provided a BASIC interpreter as the "OS", and used an audio cassette drive for storage. Its major technical weakness was a monochrome, text-only screen with no bitmapped graphics support at all, ruling out anything beyond the most primitive of games. The computer, keyboard, cassette drive and screen all fit within a one-piece trapezoidal unit, giving the PET a distinctive, high-tech look which belied its technical inferiority. The slick look wasn't enough to save it, though. Ironically, Commodore could simply have bought Apple a few months earlier – Wozniak and Jobs had actually offered to sell out to Commodore for "a few hundred thousand dollars", but Tramiel had declined, thinking Commodore could design an equally good machine itself.

At $795 (~$2900 today), the PET was roughly 40% cheaper than the Apple II, but even at that low price it was only a mediocre success. It failed to seriously challenge the Apple II's dominance due to a combination of Apple's technical edge and superior marketing, along with the PET's poor third-party software line-up, especially in the games category. Nevertheless, Commodore was definitely onto something with the low-cost approach, as evidenced by the PET's successors – the wildly successful VIC-20 and the blockbuster Commodore 64 – which took the PET's basic design and added impressive, games-oriented graphics hardware, whilst removing the screen itself to reduce cost even further.

The Radio Shack TRS-80

Also released in 1977, the TRS-80 (nicknamed the "Trash 80") completed the trio of the first consumer-ready personal computers. The base unit was essentially a thick keyboard containing a Zilog Z80 processor (compatible with the Intel 8080), 4 KB of RAM and 4 KB of ROM (which included a BASIC interpreter), and other support chips. An optional but almost essential external box allowed memory expansion. As with the Apple II and Commodore PET, software was distributed on audio cassettes, this time loaded from standard Radio Shack cassette recorders.

In a cruel twist of irony, while the original Altair would probably have done far better as a fully assembled system, the TRS-80 might actually have been more successful as a kit. It had a small but loyal following, particularly among Radio Shack electronics kit enthusiasts, because it was sold in all 3500 Radio Shack and Tandy stores worldwide and advertised in the popular Radio Shack catalog. In fact, the TRS-80 was originally envisioned by designer Don French to be a kit itself, designed to appeal to Radio Shack's bread-and-butter electronics enthusiasts. In the general consumer marketplace, however, the TRS-80's monochrome screen and lack of true bitmapped graphics meant it was unable to run anything more graphical than rudimentary character-cell "semigraphics" games – a fatal weakness. Like the Commodore PET, the TRS-80 was soundly defeated by the graphics-capable Apple II, and later by the graphics-rich VIC-20 and Commodore 64. Unlike Apple or Commodore, Radio Shack never produced a next-generation successor to the TRS-80, instead evolving it only marginally before exiting the computer market.

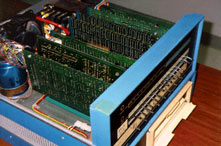

Digital's VAX - The Minicomputer Revolution

Although UNIX started life on the PDP-7 and grew up on the PDP-11, Digital Equipment Corporation's VAX was the architecture at the heart of the UNIX and VMS minicomputers which caused the demise of the mainframe. VAX stood for Virtual Address eXtension to the PDP-11, and was a large and complex 32-bit CISC architecture. DEC built many different processors which implemented the VAX architecture during its 20-year lifetime, using a wide range of technologies, from multiple circuit boards initially (see internals photo) down to single-chip microprocessors a decade later. VAX represented a major milestone in the history of computer architecture as a whole, underpinning a far wider variety of products than any previous instruction-set architecture – VAX-based systems scaled up to huge departmental minisupercomputers, and down to small, inexpensive desktop workstations. Despite this diversity, the VAX architecture remained surprisingly stable throughout its lifetime, a testament to its original design.

VAX is generally regarded as the ultimate CISC architecture. Designed by Bill Strecker, it had a huge number of instructions (over 300), including instructions for string manipulation, binary-coded decimal, and even polynomial evaluation. It was also highly orthogonal, with most instructions able to specify their arguments using any of over a dozen addressing modes, allowing for memory-to-memory-to-memory operations (although there were also 16 registers). The variable-length instructions could conceivably reach as long as 56 bytes. Although flexible, the complex instructions required elaborate microcode implementations "under the hood", and using them was not always the fastest way of doing things, which made writing a compiler that did a good job of instruction selection for the VAX no easy task. For example, on many VAX processors the INDEX instruction, which computed an array subscript with bounds checking, was about 50% slower than an equivalent sequence of simpler VAX instructions! This "useless, unnecessary complexity" was one of the key inspirations for the simpler RISC architectures which eventually replaced CISC designs like the VAX, starting in the late 1980s.
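As an illustration of how much work a single VAX instruction could hide, polynomial evaluation reduces to a short loop using Horner's method, one multiply and one add per coefficient, which is broadly the computation the VAX POLY instructions carried out in microcode. The Python sketch below shows that loop; the function name and the highest-degree-first coefficient order are assumptions of this example, not the VAX operand format.

```python
def poly_horner(x, coeffs):
    """Evaluate coeffs[0]*x**d + coeffs[1]*x**(d-1) + ... + coeffs[d].

    Horner's method rewrites the polynomial as ((c0*x + c1)*x + c2)*x + ...,
    so each coefficient costs one multiply and one add, with no explicit powers.
    """
    result = 0.0
    for c in coeffs:              # highest-degree coefficient first (assumed order)
        result = result * x + c
    return result
```

For example, `poly_horner(3.0, [2, 0, 1])` evaluates 2x² + 1 at x = 3 and returns 19.0. On a RISC machine the same loop compiles to a handful of simple instructions, which is the heart of the RISC argument.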

The first VAX, model 11/780, was introduced in 1977 at an entry price of about $200,000 (~$750,000 today). It had the distinction of being selected as the speed benchmark for "1 million instructions per second" (1 MIPS), even though its actual execution speed was only about 0.5 MIPS. Some people justified this by saying 500,000 VAX instructions were equivalent to a million instructions for most other architectures, but in reality the 1 MIPS label came from the fact that the VAX-11/780 was about the same speed as the IBM 370/158, which IBM marketed as a 1 MIPS machine. The VAX-11/780 became far more popular than the IBM system, so it ended up being used as the base machine for the relative MIPS measure, and later for the early SPEC benchmarks.
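Relative measures like these boil down to ratios against a reference machine: a benchmark's run time on the VAX-11/780 divided by its run time on the machine under test. SPEC's early benchmark suite (SPEC89) combined such per-benchmark ratios using a geometric mean. The sketch below shows that calculation; the function name and any timings fed to it are hypothetical.

```python
import math

def spec_style_score(reference_times, measured_times):
    """Geometric mean of per-benchmark speed ratios against a reference machine.

    Each ratio is reference_time / measured_time, so 2.0 means "twice as fast
    as the reference"; a machine scored against itself gets exactly 1.0.
    """
    if not reference_times or len(reference_times) != len(measured_times):
        raise ValueError("need matching, non-empty lists of run times")
    ratios = [ref / t for ref, t in zip(reference_times, measured_times)]
    return math.prod(ratios) ** (1 / len(ratios))
```

With hypothetical timings where a new machine is 2x faster than the reference on one benchmark and 4x faster on another, the score is the square root of 8, about 2.83. A useful property of the geometric mean here is that the ranking of two machines does not depend on which machine is chosen as the reference.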


Unless otherwise noted, all product images shown in this article are either in the public domain or are copyright to the relevant product's creator, owner or manufacturer. All product names mentioned in this article are the trademarks of their respective owners. Mention of product names is for informational purposes only and constitutes neither an endorsement nor a recommendation.